Here we go: https://medium.com/@akiselev87/common-documentation-storage-why-and-how

Here is the feedback I found today in my LinkedIn feed. I’m very excited, because I usually get comments and ratings only in private chats.

Hello everybody! My name is Alexey Kiselev, and I am a Lead Business Systems Analyst with 10+ years of experience. I have taught a number of in-person courses (about 15) and video courses, like this one on Udemy with more than 2,500 students from 120 countries. My last training in Russian was attended by 280 students; the English version was limited to 12 people.

I started my career in 2008 as a Business Analyst; nowadays I work with Grid Dynamics and Apple Inc. I built a couple of asset management systems for oil corporations, a federal government statistics storage, and a number of different Case Management solutions, and improved a couple of platforms for budgeting and Case Management, etc.

Why do we need this training in general?

My main aim is to give you an opportunity to complete real application requirements from idea to implementation. No long lectures, just tasks and challenges with my help and guidance (and some useful hyperlinks). The better your performance, the better the results.

What are we going to do during our 1-month training?

Oh, this is obvious – we are going to study. A lot.

There will be no friendly customer, only my skeptical view and limited time. Do not expect a lot of pure BPMN and UML, because I’m a bit tired of them, and analysis is mostly about your brain, analytical skills, and techniques, not just about diagrams. I wish we could also do implementation and testing, but once again, this is a 1-month express training.

How are we going to do that?

I’ll provide at least 2 feedback sessions for all your documents. This is not a boring theoretical course (if you need a boring one, you can get access by following this hyperlink). My feedback will be available weekly via Google Classroom comments and via the Telegram text chat from 8 am to 10 am and from 4 pm to 8 pm Pacific Standard Time (PST).

No webinars or calls are planned.

What will you receive by the end of the training?

My respect and a reference via LinkedIn. Why respect? Because it seems OK to me to remove people from the group with no refund if they are not actively working, for any reason (if you already have an intensive project at work, please do not apply). You will also get a free certificate from Udemy.

Who will find this training useful?

It seems to me that 1-5 years of experience is the best fit for the basic training requirements. All you need is a theoretical base.

What is the length of the training?

Around one month.

When are we going to start?

October 2nd.

Price

I don’t see any sense in excluding 95% of potential students with a high price, so I will only charge a motivational amount (you can donate it to WWF or any other organization). This amount is non-refundable, even if you are removed from the training.

How do I become a student?

Just apply via the Google Form: https://goo.gl/forms/dUI1Btlsm4Af725U2

There is a limited number of spots.

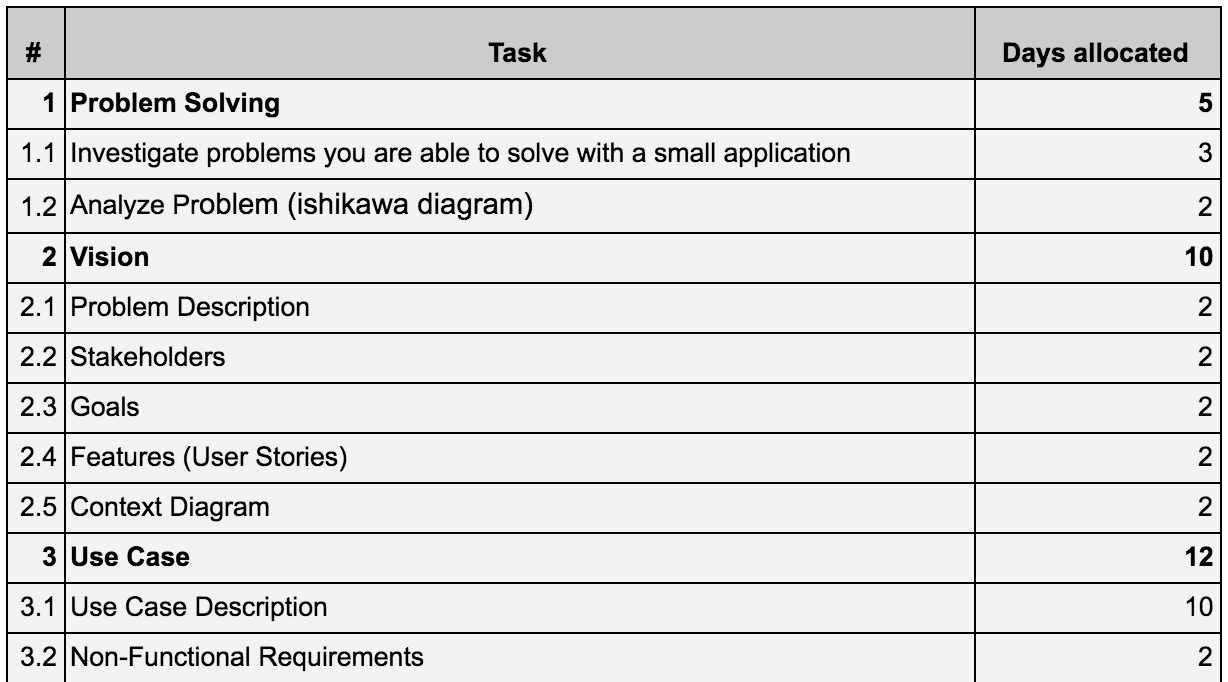

Approximate Schedule

Hello everybody! My name is Alexey Kiselev, and I am a Lead Business Systems Analyst with 10+ years of experience. I have taught a number of in-person courses (about 15) and video courses, like this one on Udemy with more than 2,500 students from 120 countries. My last training in Russian was attended by 280 students; the English version was limited to 12 people.

I started my career in 2008 as a Business Analyst; nowadays I work with Grid Dynamics and Apple Inc. I built a couple of asset management systems for oil corporations, a federal government statistics storage, and a number of different Case Management solutions, and improved a couple of platforms for budgeting and Case Management, etc.

Why do we need this training in general?

My main aim is to give you an opportunity to complete real application requirements from idea to implementation. No long lectures, just tasks and challenges with my help and guidance (and some useful hyperlinks). The better your performance, the better the results.

What are we going to do during our 1-month training?

Oh, this is obvious – we are going to study. A lot.

There will be no friendly customer, only my skeptical view and limited time. Do not expect a lot of pure BPMN and UML, because I’m a bit tired of them, and analysis is mostly about your brain, analytical skills, and techniques, not just about diagrams. I wish we could also do implementation and testing, but once again, this is a 1-month express training.

How are we going to do that?

I’ll provide at least 2 feedback sessions for all your documents. This is not a boring theoretical course (if you need a boring one, you can get access by following this hyperlink). My feedback will be available weekly via Google Classroom comments and via the Telegram text chat from 8 am to 10 am and from 4 pm to 8 pm Pacific Standard Time (PST).

No webinars or calls are planned.

What will you receive by the end of the training?

My respect and a reference via LinkedIn. Why respect? Because it seems OK to me to remove people from the group with no refund if they are not actively working, for any reason (if you already have an intensive project at work, please do not apply). You will also get a free certificate from Udemy.

Who will find this training useful?

It seems to me that 1-5 years of experience is the best fit for the basic training requirements. All you need is a theoretical base.

What is the length of the training?

This is a critical change: I must finish it by the end of September. Please have a look at the schedule to get a basic understanding of why this is a problem.

When are we going to start?

September 5th.

How do I become a student?

Just apply via the Google Form: https://goo.gl/forms/CMEoJiEWWZeh52ft2

There is a limited number of spots.

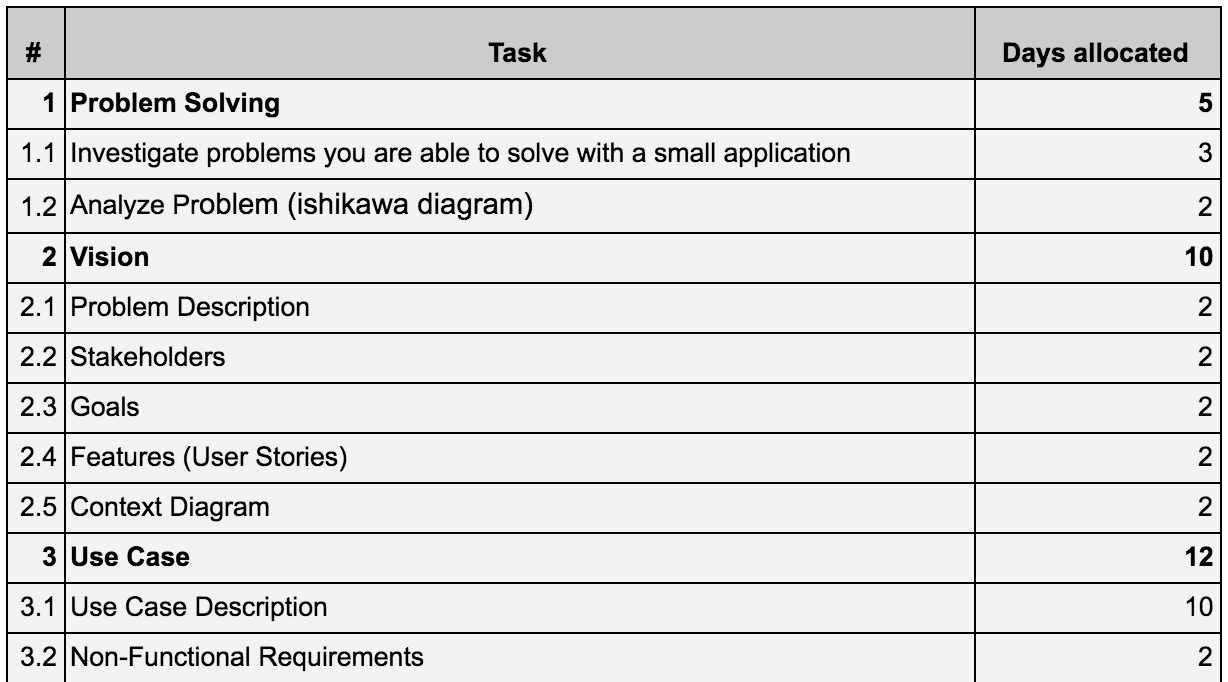

Approximate Schedule

Today I found out that my Udemy course “Requirements from idea to implementation. BA and SA guide” is a bestseller:

I started my career in 2008 as a Business Analyst. Since then I have worked as a Business Analyst, Systems Analyst, Product Owner, Test Automation Engineer, and Software Engineer. I worked on custom development projects (for example, I made the Federal State Statistics Unit Warehouse and Portal) and with products (Case Management System, Customer Relationship Management Systems, etc.), so this course is an overview of the technologies, practices, and tips I used during my career.

I also have a couple of other Udemy courses, more than 15 articles, and some on-site and online courses. This course is built around the problems I saw during the educational process, and I really tried to highlight them (in particular, you will hear a lot of “concentrate on the problem first” tips).

So, to build this course, we had a group of 10 BA/SA volunteers. We started with the usual problems BAs and SAs face, set up the course structure you can hear about in the introduction, and wrote something like a 600-page book, which you will find at the end of the course as a downloadable PDF attachment. This is the base information for the theoretical part. We also researched templates and prepared a set of our favorites, and you’ll be able to download these document templates. Then we made a practice section based on an example from one of my practical online courses, which I ran at the end of summer 2017 (you’ll get the PDF practice examples as well, but they are not 100% done, because we would like to show you the process of creating requirements).

Please note that we analyzed more than 900 websites to combine all the necessary theory for you (all these hyperlinks are available in the second PDF file with theory).

Here is the link:

The limited discount promo code is AKDISCOUNT2018.

PS.

I would also like to say special thanks to my colleagues, who really helped to control the quality of all materials, examples, etc.:

● Evgenia Pavlova

● Olesia Volodina

● Maria Begouleva

● Julia Dorozhkina

● Anna Letunovsky

● Dmitry Ivannikov

And there were a bunch of people who were also involved in creating all these materials:

● Daria Volkova

● Ekaterina Ilyashina

● Julia Vasilieva

And many thanks to Ekaterina Gert for the title image.

Prerequisites: Cucumber Again. From Requirements to Java Test

To create any simple Cucumber+Java test, we need a runner class that tells Cucumber where the feature files and the glue code (step definitions) are. That is pretty simple to do: if you don’t need all the bells and whistles at your first step, this will be enough:

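```java
package com.cucumber.StepDefinitions;

import cucumber.api.CucumberOptions;
import cucumber.api.junit.Cucumber;
import org.junit.runner.RunWith;

// JUnit runs this class through Cucumber: it reads the .feature files
// under "features" and looks for step definitions in the "glue" package
@RunWith(Cucumber.class)
@CucumberOptions(
        features = "src/test/resources/feature",
        glue = "com.cucumber.StepDefinitions"
)
public class CukesRunner {
}
```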

My favorite report engine is ExtentReports, so let’s add a proper class:

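```java
package com.framework.common;

import com.relevantcodes.extentreports.ExtentReports;
import com.relevantcodes.extentreports.ExtentTest;
import com.relevantcodes.extentreports.LogStatus;
import org.testng.annotations.AfterSuite;

import java.util.HashMap;
import java.util.Map;

public class ExtentReport {

    // One report file for the whole run, created lazily
    public static ExtentReports extentReports;

    // One report node per test name, so each test class gets its own entry
    protected static final Map<String, ExtentTest> extentTests = new HashMap<>();

    public static synchronized ExtentReports getExtentReport() {
        if (extentReports == null) {
            // "true" replaces the report left over from a previous run
            extentReports = new ExtentReports(System.getProperty("user.dir") + "/ExtentReport/index.html", true);
        }
        return extentReports;
    }

    public static synchronized ExtentTest getExtentTest(String testName) {
        ExtentTest extentTest = extentTests.get(testName);
        if (extentTest == null) {
            extentTest = getExtentReport().startTest(testName);
            extentTest.log(LogStatus.INFO, testName + " configuration started");
            extentTests.put(testName, extentTest);
        }
        return extentTest;
    }

    // TestNG calls this after the suite when the class is part of a TestNG run;
    // the JUnit-based CukesRunner does not trigger it, so call the same code from its teardown
    @AfterSuite
    public void endSuite() {
        ExtentReports report = getExtentReport();
        for (ExtentTest test : extentTests.values()) {
            report.endTest(test);
        }
        report.flush();
        report.close();
    }
}
```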

And the usage is extremely simple:

```java
// Inside a test class; className is a variable defined in that test (not shown)
ExtentReport.getExtentTest(getClass().getSimpleName())
        .log(LogStatus.INFO, "Run test for " + className);
```

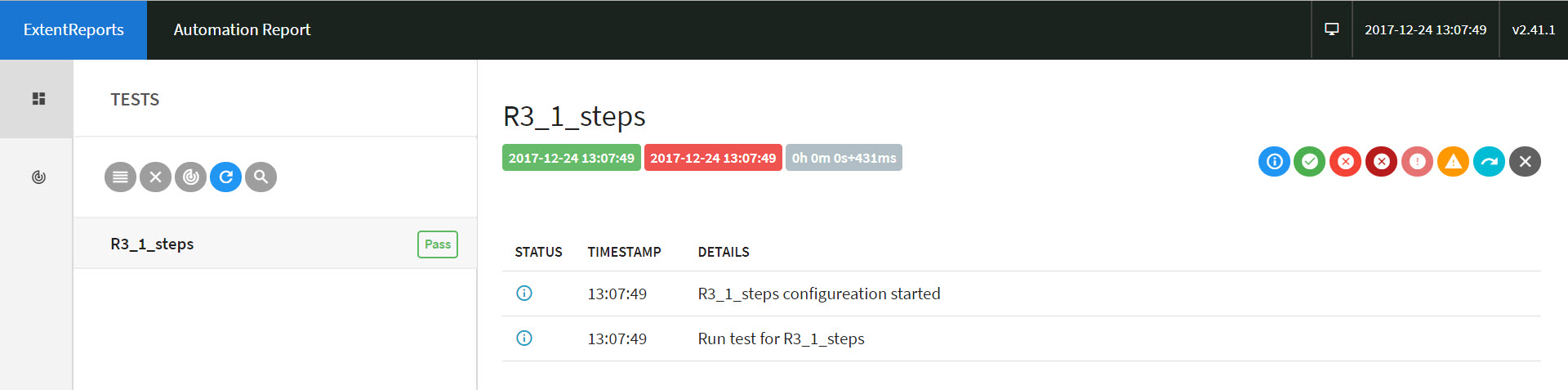

This is what it looks like:

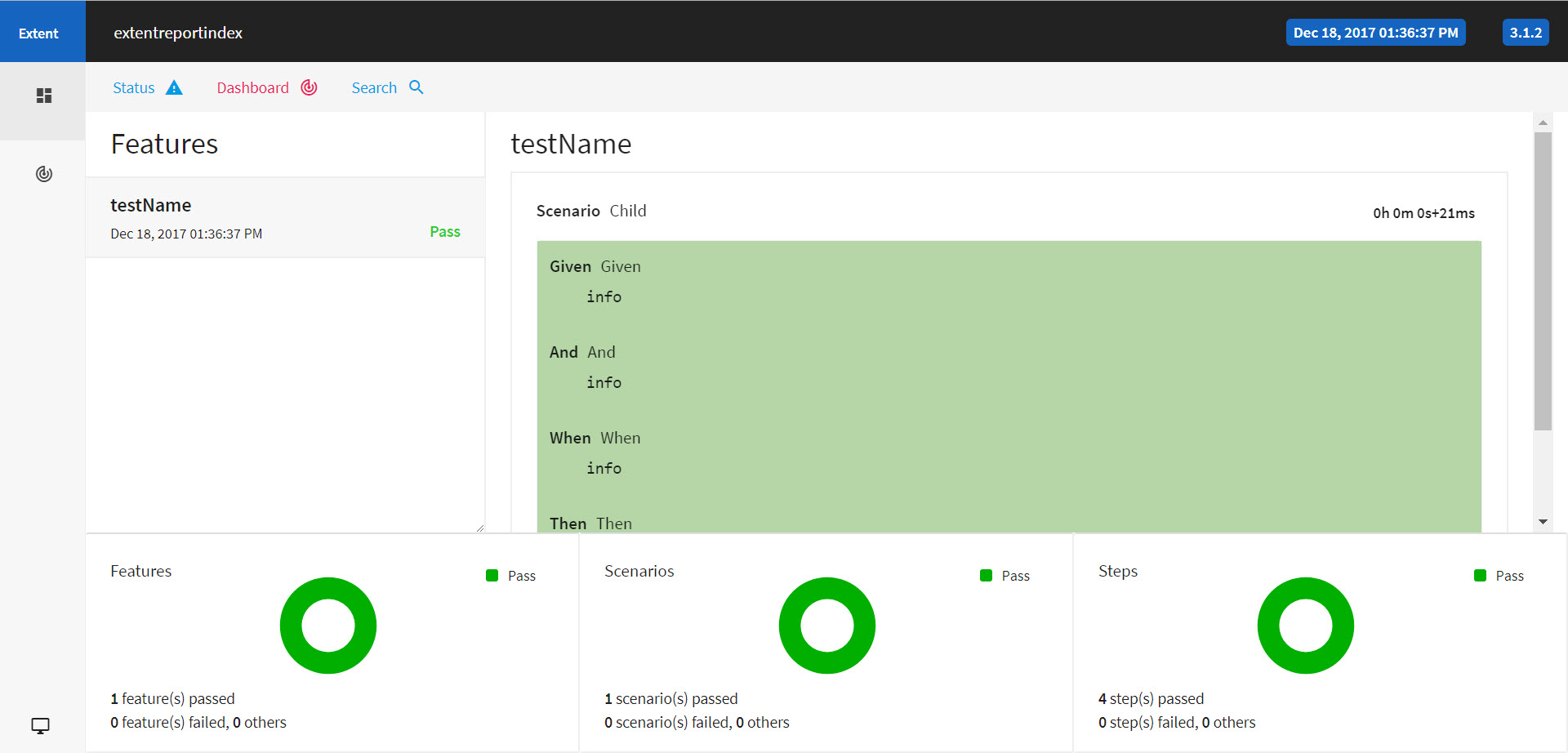

After that, I researched whether there are any ready-to-go plugins or examples of how to make reports in a Gherkin-like format. I easily found one, but it worked without problems only in Eclipse. Here is an example (https://automationissues.wordpress.com/2017/09/29/cucumber-extent-reporting/):

So, this is another article about Cucumber, but this time I’ll try to describe the SDLC methodology. If you skipped the posts about the correct Gherkin/Cucumber format, please have a look here: Cucumber — bridge or another one abyss between BA and QA

If you would like to try Java+Cucumber, please have a look here: Create a Cucumber Project by Integrating maven-cucumber-selenium-eclipse

But if you would like to get an overview of the process, please continue reading. As educated analysts, we get a list of requirements as the result of our work. We know that there are functional and non-functional requirements. This is my typical workflow for delivering requirements to QAs:

If you would like to test a particular requirement, then you are:

So, it would be great to find one particular Use Case and run it from one place, without tons of Word documents (plus xls tables as an option). The problem is that Cucumber is not about User Stories, Use Cases, etc. Let’s answer a couple of important questions:

I would place a User Story and a Feature at the same level of detail, but the problem is that a User Story is way too long for a Cucumber Feature. Why? Because a Cucumber Feature is a simple text file, and the Feature name usually doubles as the name of this file! This looks nice in various demos, where teachers have “MyFeature1” and “MyFeature2”, but in practice we should split feature files into groups via packages (folders); otherwise this will be a disaster. Either way, we will not be able to fit all these “As a User” parts, so here is the key point: if we are using User Stories, we have space only for “<some goal>” from “As a <type of user>, I want <some goal> so that <some reason>”.

There is also another method we could use: add the requirement number, which helps a lot to support consistency and traceability.
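To make the “only the goal fits” point and the requirement-number idea concrete, here is a minimal Java sketch that pulls “<some goal>” out of a user story written in the “As a …, I want … so that …” template and prefixes it with a requirement number to form a feature file name. The class name FeatureNames, the REQ-012 style IDs, and the naming convention itself are hypothetical, not something Cucumber requires; stories that do not follow the template are rejected rather than guessed at.

```java
import java.util.Locale;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/** Hypothetical helper: derives a feature file name from a requirement ID and a user story. */
final class FeatureNames {

    // "As a <type of user>, I want <some goal> so that <some reason>";
    // the "to" after "I want" and the whole "so that" clause are optional
    private static final Pattern USER_STORY = Pattern.compile(
            "^As an? (.+?), I want (?:to )?(.+?)(?: so that (.+))?$",
            Pattern.CASE_INSENSITIVE);

    private FeatureNames() {
    }

    /** Returns only the "<some goal>" part; throws if the text is not in the template. */
    static String goalOf(String userStory) {
        Matcher m = USER_STORY.matcher(userStory.trim());
        if (!m.matches()) {
            throw new IllegalArgumentException("Not a user story: " + userStory);
        }
        return m.group(2);
    }

    /** Requirement number first, then the goal as a lowercase slug. */
    static String featureFileName(String requirementId, String userStory) {
        String slug = goalOf(userStory)
                .toLowerCase(Locale.ROOT)
                .replaceAll("[^a-z0-9]+", "_") // spaces and punctuation become underscores
                .replaceAll("^_+|_+$", "");    // no leading or trailing underscores
        return requirementId + "_" + slug + ".feature";
    }
}
```

For example, `FeatureNames.featureFileName("REQ-012", "As a user, I want to send a message so that my friend can read it")` returns `REQ-012_send_a_message.feature`, so files sort by requirement number and each one traces back to its requirement.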

Here is my new Udemy course “Requirements from idea to implementation. BA and SA guide”: 86 lectures with examples, templates, etc. It will be available in January 2018.

I started my career in 2008 as a Business Analyst. Since then I have worked as a Business Analyst, Systems Analyst, and Product Owner. I worked on custom development projects (for example, I made the Federal State Statistics Unit Warehouse and Portal) and with products (Case Management System, Customer Relationship Management Systems, etc.), so this course is an overview of the technologies, practices, and tips I used during my career.

I also have a couple of other Udemy courses, more than 15 articles, and some on-site and online courses. This course is built around the problems I saw during the educational process, and I really tried to highlight them (in particular, you will hear a lot of “concentrate on the problem first” tips).

So, to build this course, we had a group of 10 BA/SA volunteers. We started with the usual problems BAs and SAs face, set up the course structure you can hear about in the introduction, and wrote something like a 600-page book, which you will find at the end of the course as a downloadable PDF attachment. This is the base information for the theoretical part. We also researched templates and prepared a set of our favorites, and you’ll be able to download these document templates. Then we made a practice section based on an example from one of my practical online courses, which I ran at the end of summer 2017 (you’ll get the PDF practice examples as well, but they are not 100% done, because we would like to show you the process of creating requirements).

Please note that we analyzed more than 900 websites to combine all the necessary theory for you (all these hyperlinks are available in the second PDF file with theory).

I really hope you will enjoy this course, and please let me know if you would like to learn more or would like me to add some clarifications.

Thank you!

One of the simplest Cucumber tutorials

This blog will explain the steps to follow for creating a simple Cucumber project.


Free online course «Practices for Systems Analysts to Write Implementation Tasks»

https://www.udemy.com/sa-implementation-tasks/

This course will tell you how to write proper tasks for your developers if you have a UI with a set of fields.

What will you learn from it?

• How to write tasks for developers (UI with fields, grids, buttons)

• What the high-level difference between SQL and NoSQL is

• How REST API works

Cucumber is a tool that supports the Behavior Driven Development (BDD) approach and is used to write acceptance tests for web applications. It allows automating functional validation in a format that is easy to read and understand (like plain English) for Business Analysts, Developers, Testers, etc.

Cucumber feature files can serve as good documentation for everyone. There are many other tools, like JBehave, that also support BDD. Cucumber was initially implemented in Ruby and then extended to Java. Both tools support JUnit natively.

Behavior Driven Development is an extension of Test Driven Development, and it is used to test the system rather than a particular piece of code. We will discuss BDD and the style of writing BDD tests in more detail.

Cucumber can be used along with Selenium and Java, and this is more than enough to make me happy.

In order to understand Cucumber, we need to know all its features and their usage.

#1) Feature Files:

Feature files are an essential part of Cucumber: they are used to write test automation steps or acceptance tests and can serve as a living document. The steps are the application specification. All feature files end with the .feature extension.

Sample feature file:

```gherkin
Feature: Login Functionality Feature
  In order to ensure Login Functionality works,
  I want to run the cucumber test to verify it is working

  Scenario: Login Functionality
    Given user navigates to SOFTWARETESTINGHELP.COM
    When user logs in using Username as "USER" and Password "PASSWORD"
    Then login should be successful

  Scenario: Login Functionality
    Given user navigates to SOFTWARETESTINGHELP.COM
    When user logs in using Username as "USER1" and Password "PASSWORD1"
    Then error message should be thrown
```
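Each step in such a file is bound to Java code by pattern matching. The sketch below uses plain `java.util.regex` to show how an old-style regex step definition (the kind used with the `cucumber.api` packages) would capture the two quoted values from the “When” step, assuming straight double quotes in the step text. Cucumber-JVM does this matching itself, so the StepMatcher class is for illustration only.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/** Illustration only: how a regex step pattern captures quoted parameters from a Gherkin step. */
final class StepMatcher {

    // Same shape as an annotation such as
    // @When("^user logs in using Username as \"([^\"]*)\" and Password \"([^\"]*)\"$")
    static final Pattern LOGIN_STEP = Pattern.compile(
            "^user logs in using Username as \"([^\"]*)\" and Password \"([^\"]*)\"$");

    private StepMatcher() {
    }

    /** Returns the captured arguments in order, or an empty list when the step does not match. */
    static List<String> arguments(Pattern stepPattern, String stepText) {
        List<String> args = new ArrayList<>();
        Matcher m = stepPattern.matcher(stepText);
        if (m.matches()) {
            for (int i = 1; i <= m.groupCount(); i++) {
                args.add(m.group(i));
            }
        }
        return args;
    }
}
```

Matching the second scenario’s “When” step yields `[USER1, PASSWORD1]`, which Cucumber would pass to the step method as its two parameters; the “Given” line does not match this pattern and yields an empty list.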

Let’s start with something simple – there are three universally supported image formats: GIF, PNG, and JPEG. In addition to these formats, some browsers also support newer formats such as WebP and JPEG XR, which offer better overall compression and more features. So, which format should you use?

First, we should do a good job with the images themselves (this part is boring, so I’ll just provide a short list of recommendations):

Other than photos and thumbnails, your website most likely includes many icons (arrows, stars, signs, marks) and auxiliary images. Google’s search results page consists of over 80 (!) tiny icons.

A simple solution for this problem is to use a CSS sprite: a single image that contains all your smaller icons. Your web page downloads this single image from your server, and the page’s HTML uses different CSS class names to point to the smaller images within the larger one.

Now, instead of 80 images, Google’s visitors download just a single image. Their browser will quickly download and cache this single image from Google’s servers and all images will be immediately visible.
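Here is a small Java sketch of the class-name mapping described above, assuming all icons have the same square size and are laid out left to right, top to bottom in a grid. The class SpriteCss, the file name sprite.png, and the class names are hypothetical. Each generated rule shifts the shared background image by negative offsets so that only one cell shows through the icon-sized element.

```java
import java.util.List;
import java.util.Locale;

/** Hypothetical helper: one CSS rule per icon for a sprite laid out as a uniform grid. */
final class SpriteCss {

    private SpriteCss() {
    }

    /** Icon n sits in column n % columns and row n / columns of the sprite. */
    static String rule(String className, String spriteUrl, int index, int columns, int iconSize) {
        int x = -(index % columns) * iconSize; // shift left to the icon's column
        int y = -(index / columns) * iconSize; // shift up to the icon's row
        return String.format(Locale.ROOT,
                ".%s { background: url('%s') no-repeat %dpx %dpx; width: %dpx; height: %dpx; }",
                className, spriteUrl, x, y, iconSize, iconSize);
    }

    /** Builds the stylesheet for the given class names, in sprite order. */
    static String stylesheet(List<String> classNames, String spriteUrl, int columns, int iconSize) {
        StringBuilder css = new StringBuilder();
        for (int i = 0; i < classNames.size(); i++) {
            css.append(rule(classNames.get(i), spriteUrl, i, columns, iconSize)).append('\n');
        }
        return css.toString();
    }
}
```

For example, icon 5 in a 4-column grid of 16px icons is at column 1, row 1, so `SpriteCss.rule("icon-star", "sprite.png", 5, 4, 16)` sets its background position to `-16px -16px`.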

To be honest, I would also highly recommend using Font Awesome on your page.

So, as a next step, I decided to implement Avatars, News Feed, and State Machine.

Avatars

It was pretty simple to add avatars: add a module that handles image upload/cropping before the image is saved to the DB Avatar field. I found a couple of modules to add, but they did not work 100%:

So, finally I found the ngImgCrop module for this purpose.

State Machine

I used a classic state machine to handle friendship statuses (and errors), so I drew a pretty simple scheme with transitions and, after that, an Excel file describing every transition (including error handling with proper messages). You will never see these error messages unless you modify existing REST URLs, for example, to try to approve the friendship of some unknown user.

Because this is a pretty simple and well-known area, here is a short theoretical description and implementation of the state machine:

Code snapshot:

```java
case "Restore Subscription":
    switch (relationFriend) {
        // Only code 30 can be restored; the other listed codes report an error
        case 0: case 1:
        case 10: case 11: case 12:
        case 20: case 21: case 22: case 23:
            errorExists = 1;
            errorText = "There is no ignored friend. ";
            break;
        case 30:
            newRelationFriend = 21;
            break;
    }
    break;

case "Unsubscribe":
    // ... (the snapshot ends here)
```
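Since the transitions already live in an Excel file, a table-driven variant keeps the code and the spec aligned: each spreadsheet row becomes one entry. This is a minimal Java sketch of that alternative, not the author’s implementation. Only the “Restore Subscription” transition from 30 to 21 and its error text come from the snapshot above; the class and method names and the fallback message "Unknown transition. " are hypothetical. Unlike the switch, which silently ignores codes it does not list, this version rejects every pair that is missing from the table.

```java
import java.util.Collections;
import java.util.HashMap;
import java.util.Map;

/** Table-driven sketch: for each action, a map from the current relation code to the next one. */
final class FriendshipStateMachine {

    /** Outcome of one action: either a new relation code or an error text. */
    static final class Result {
        final Integer newRelation; // null when the transition is rejected
        final String errorText;    // null when the transition is allowed

        Result(Integer newRelation, String errorText) {
            this.newRelation = newRelation;
            this.errorText = errorText;
        }

        boolean isError() {
            return errorText != null;
        }
    }

    private final Map<String, Map<Integer, Integer>> transitions = new HashMap<>();
    private final Map<String, String> errorTexts = new HashMap<>();

    /** Registers one allowed transition: the action in state "from" leads to state "to". */
    FriendshipStateMachine allow(String action, int from, int to) {
        transitions.computeIfAbsent(action, a -> new HashMap<>()).put(from, to);
        return this;
    }

    /** Sets the message returned when the action is not allowed in the current state. */
    FriendshipStateMachine onError(String action, String errorText) {
        errorTexts.put(action, errorText);
        return this;
    }

    /** Looks up the next relation code; any pair not in the table is rejected. */
    Result apply(String action, int currentRelation) {
        Integer next = transitions
                .getOrDefault(action, Collections.<Integer, Integer>emptyMap())
                .get(currentRelation);
        if (next != null) {
            return new Result(next, null);
        }
        // Hypothetical fallback for actions without a configured message
        return new Result(null, errorTexts.getOrDefault(action, "Unknown transition. "));
    }
}
```

With `allow("Restore Subscription", 30, 21)` and `onError("Restore Subscription", "There is no ignored friend. ")`, applying the action in state 30 moves the relation to 21, while state 10 returns the error text, matching the switch above.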

News Feed

In fact, a news feed is somewhat like messaging, but with different security and, of course, different CQL statements. Therefore, the new requirements for the News Feed are:

I don’t want to write a full description for each requirement or describe User Stories, because I’m a Facebook user and requirements at this level are pretty obvious and straightforward. I skipped some of these requirements during implementation and will implement them in one of the next iterations.

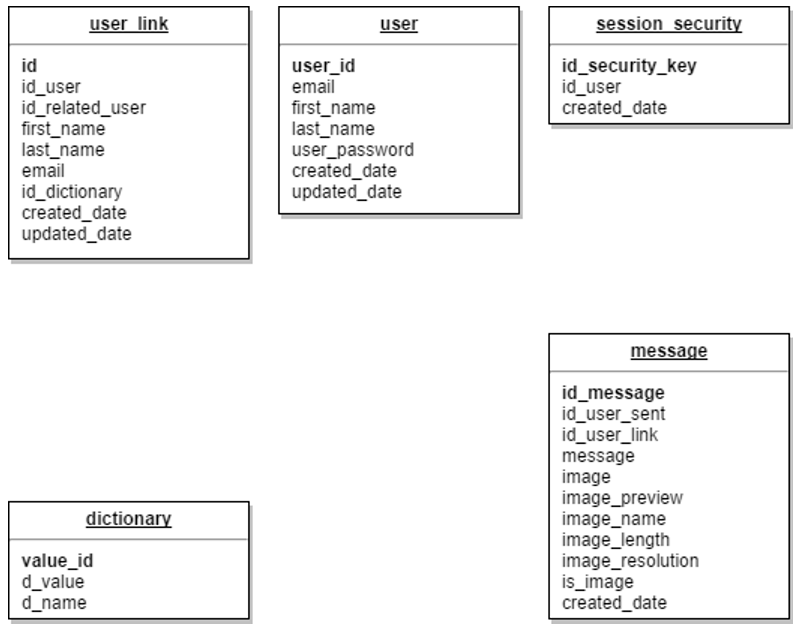

Let me describe my current database structure (I used draw.io for that):

Let me introduce DB changes:

1) Introduce new object to store News Feed items

2) Add fields to store avatars

So, as a result, I got this demo video and a bunch of items for the next version in my backlog.

Here I’ll research the best way to connect my Java code to Cassandra. There are a lot of examples of how to do that, but the main thing is that I’m developing a kind of chat application on my localhost (single insert/update statements, etc.), while all these Spark examples are perfect for analytical workflows.

The first example is for Spark 1.6:

```java
import org.apache.spark.SparkConf;
import org.apache.spark.api.java.JavaSparkContext;

// ... inside the connector class:
public static JavaSparkContext getCassandraConnector() {
    SparkConf conf = new SparkConf();
    conf.setAppName("Chat");
    // Lets several SparkContexts coexist in one JVM (a workaround, not recommended)
    conf.set("spark.driver.allowMultipleContexts", "true");
    // Cassandra node used by the Spark Cassandra Connector
    conf.set("spark.cassandra.connection.host", "127.0.0.1");
    conf.set("spark.rpc.netty.dispatcher.numThreads", "2");
    // Run locally with 2 worker threads
    conf.setMaster("local[2]");
    return new JavaSparkContext(conf);
}
```

So, I also have an example for Spark 2.x, where the builder automatically reuses an existing SparkContext if one exists and creates a SparkContext if it does not. Configuration options set in the builder are automatically propagated to Spark and Hadoop during I/O.

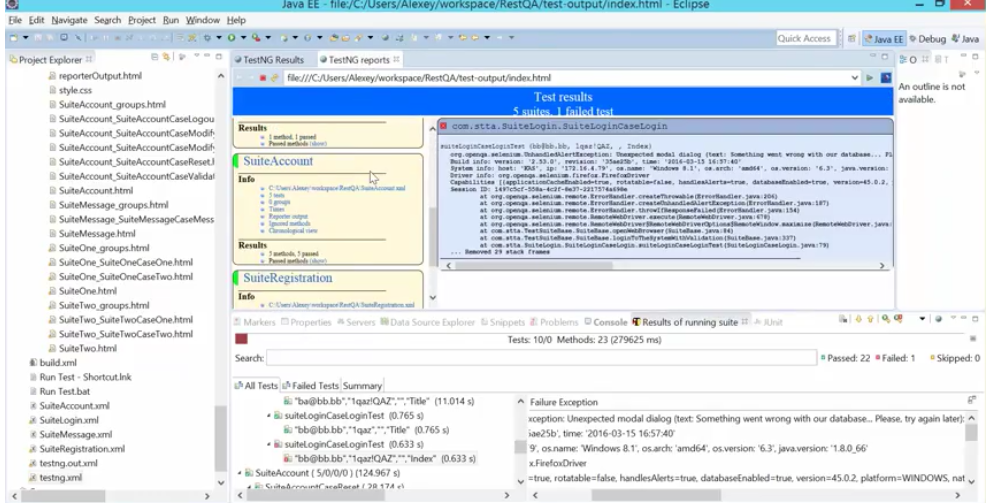

Here is a short comparison of the most popular ways to build test reports for a TestNG + Java framework (with a comparison table at the end of the post).

Log4J

This is a pretty simple logger that writes the test suite execution log to the Windows console, the Eclipse console, and a text file.

There is no screenshot capturing or easy navigation between multiple suites, so this is just a huge text file.

Introduction to Automation testing:

Testing is an essential part of the software development process. While testing intermediate versions of products/projects under development, the testing team needs to execute a number of test cases. In addition, prior to releasing every new version, it is mandatory that the version pass a set of “regression” and “smoke” tests. Most of these tests are standard for every new version of a product/project, and therefore they can be automated to save human resources and the time needed to execute them.

Benefits of using automated testing are the following:

Automation workflow for the application can be presented as follows:

Goal:

The goal is to create a flexible and extendable automated testing framework that expands test coverage to as many solutions as possible. The framework must have input and output channels and a library of methods to work with the UI.

Environment Specifications:

This is a framework with test suites that allows me to test my Simple Chat Application UI in 5 minutes.

I can provide Bitbucket project access upon request, and this is a link to the original chat application.

This is a simple RESTful chat application for sending messages between friends. Key features:

Link to the project upon request.

Persistence and Stickiness for SA dictionary:

When customers visit an e-commerce site, they usually start by browsing the site. Depending on the application, the site may require the client to be persisted (stuck) to one server as soon as the initial connection is established, or the application may require this only when the client starts to create a transaction, such as when building a shopping cart.

For example, after the client adds items to a shopping cart, it is important that all subsequent client requests are directed to the same real server so that all the items are contained in one shopping cart on one server. An instance of a customer’s shopping cart is typically local to a particular server rather than duplicated across multiple servers.

E-commerce applications are not the only types of applications that require a sequence of client requests to be directed to the same real server. Any web applications that maintain client information may require stickiness, such as banking and online trading applications, or FTP and HTTP file transfers.

More info: Cookies, Sessions, and Persistence
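To illustrate stickiness, here is a minimal in-memory Java sketch of what a load balancer does: the first request of a session picks a server round-robin, and every later request with the same session ID goes to that same server. Real balancers usually key on a cookie or the client IP; the StickyBalancer class, the session IDs, and the server names here are hypothetical.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Sketch of session stickiness: new sessions go round-robin, known sessions stay pinned. */
final class StickyBalancer {

    private final List<String> servers;
    private final Map<String, String> serverBySession = new HashMap<>();
    private int next = 0; // round-robin position for the next new session

    StickyBalancer(List<String> servers) {
        if (servers.isEmpty()) {
            throw new IllegalArgumentException("At least one server is required");
        }
        this.servers = new ArrayList<>(servers);
    }

    /** Returns the server for this session, pinning the session on its first request. */
    synchronized String route(String sessionId) {
        return serverBySession.computeIfAbsent(sessionId, id -> {
            String server = servers.get(next);
            next = (next + 1) % servers.size();
            return server;
        });
    }
}
```

With servers `app1` and `app2`, sessions `cart-A` and `cart-B` land on different servers, and every later request from `cart-A` returns to `app1`, where its shopping cart lives. The flip side is the one the text implies: if `app1` goes down, that cart is lost unless the session state is replicated or stored outside the server.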

Bubble Sort

Merge Sort

Quick Sort

Max Heap Deletion
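This version of the post lists these algorithms by name only. As a compact reference, here are textbook Java versions of each on `int` arrays (Lomuto partition for quick sort; deletion of the maximum from an array-backed max-heap). They are generic sketches, not code taken from the original post.

```java
import java.util.Arrays;

/** Textbook versions of the algorithms listed above, on int arrays. */
final class Sorts {

    private Sorts() {
    }

    /** Bubble sort: swap adjacent out-of-order pairs; stop early when a pass makes no swaps. */
    static void bubbleSort(int[] a) {
        for (int end = a.length - 1; end > 0; end--) {
            boolean swapped = false;
            for (int i = 0; i < end; i++) {
                if (a[i] > a[i + 1]) {
                    swap(a, i, i + 1);
                    swapped = true;
                }
            }
            if (!swapped) {
                return;
            }
        }
    }

    /** Merge sort: sort each half recursively, then merge the sorted halves into a new array. */
    static int[] mergeSort(int[] a) {
        if (a.length <= 1) {
            return a.clone();
        }
        int mid = a.length / 2;
        int[] left = mergeSort(Arrays.copyOfRange(a, 0, mid));
        int[] right = mergeSort(Arrays.copyOfRange(a, mid, a.length));
        int[] out = new int[a.length];
        int i = 0, j = 0, k = 0;
        while (i < left.length && j < right.length) {
            out[k++] = left[i] <= right[j] ? left[i++] : right[j++];
        }
        while (i < left.length) out[k++] = left[i++];
        while (j < right.length) out[k++] = right[j++];
        return out;
    }

    /** Quick sort of a[lo..hi] in place (Lomuto partition, last element as pivot). */
    static void quickSort(int[] a, int lo, int hi) {
        if (lo >= hi) {
            return;
        }
        int pivot = a[hi];
        int p = lo; // everything left of p is smaller than the pivot
        for (int i = lo; i < hi; i++) {
            if (a[i] < pivot) {
                swap(a, i, p++);
            }
        }
        swap(a, p, hi);
        quickSort(a, lo, p - 1);
        quickSort(a, p + 1, hi);
    }

    /**
     * Removes the maximum (the root) from the max-heap heap[0..size-1], size >= 1: the last
     * element moves to the root and sifts down. The heap then occupies heap[0..size-2].
     */
    static int deleteMax(int[] heap, int size) {
        int max = heap[0];
        heap[0] = heap[size - 1];
        int n = size - 1;
        int i = 0;
        while (true) {
            int left = 2 * i + 1, right = left + 1, largest = i;
            if (left < n && heap[left] > heap[largest]) largest = left;
            if (right < n && heap[right] > heap[largest]) largest = right;
            if (largest == i) break;
            swap(heap, i, largest);
            i = largest;
        }
        return max;
    }

    private static void swap(int[] a, int i, int j) {
        int t = a[i];
        a[i] = a[j];
        a[j] = t;
    }
}
```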

Hello everybody! My name is Alexey Kiselev, and I’m the Head of a Systems Analysts Department with 10+ years of experience. I have run multiple courses (about 15) and video courses, like this one on Udemy with more than 2,500 students from 120 countries; my last Russian-language course was attended by 280 students. I also have a blog with more than 100,000 views (this one).

I started my career in 2008 as a Business Analyst; since 2013 I have mostly been playing a Systems Analyst’s role. I built a couple of asset management systems for oil corporations, a government statistics storage, and a number of different Case Management solutions, and improved a couple of platforms for budgeting and Case Management, etc.

I received more than 30 requests to repeat the training I ran in Russian in 2017, so I decided to repeat it, but for 6 students only.

My main aim is to give you an opportunity to partially complete real application requirements from idea to implementation. No long lectures, only tasks and challenges under my control and with my help (OK, and my useful hyperlinks). The more you put in, the more results you get.

Oh, this is obvious – we are going to study. A lot.

There will be no customer, only your ideas and my skeptical view. Do not expect a lot of pure BPMN and UML, because I’m a bit tired of them, and analysis is mostly about your brain, analytical skills, and techniques, not just about diagrams. I wish we could do implementation and testing, but I believe we will not have enough developers in our group.

I’ll provide at least 2 feedback sessions for all your documents. This is not a boring theoretical course (if you need a boring one, you can get access to it by clicking this hyperlink). My feedback is available weekly via Telegram text chat from 8 am to 3 pm, and from 4 pm to 8 pm via a Telegram or Skype conference. My time zone is PST.

My respect and a reference via LinkedIn. Why respect? Because it seems OK to me to remove people from the group with no refund if they are not actively working, for any reason (if you have an intensive project at work, please do not apply). You’ll also get a free certificate from Udemy.

It seems to me that 1-5 years of experience is the best fit for the course entry requirements. All you need is a theoretical base.

It depends on your performance. I would expect 1-2 months.

I believe we should start in March.

Just apply via the Google Form once it’s available. There will be only 6 spots.

| # | Task | Days allocated |
|---|------|----------------|
| 1 | Problem Solving | 5 |
| 1.1 | Find at least 5 interesting problems you are able to solve with a small application | 3 |
| 1.2 | Analyze Problem (Ishikawa diagram) | 2 |
| 2 | Vision | 10 |
| 2.1 | Problem Description | 2 |
| 2.2 | Stakeholders | 2 |
| 2.3 | Goals | 2 |
| 2.4 | Features (User Stories) | 2 |
| 2.5 | Context Diagram | 2 |
| 3 | Use Case | 21 |
| 3.1 | Use Case Diagram or Traceability Matrix | 5 |
| 3.2 | Use Case Description | 10 |
| 3.3 | Non-Functional Requirements | 2 |
| 3.4 | ER-model | 4 |
| 4 | Implementation Task | 18 |
| 4.1 | Database description | 7 |
| 4.2 | UI Prototype | 3 |
| 4.3 | UI Description | 5 |
| 4.4 | REST API description attempt | 3 |